Table of Contents

- The Value of Personalized Interfaces in Contem...

- When Load Time Improvement Is Critical for Tod...

- When Responsiveness Optimization Makes a Diff...

- The Combination of Visual Experience with Dev...

- When Specialized Development Teams Manages A...

- Why Prospect Capture Strategies Vary for dif...

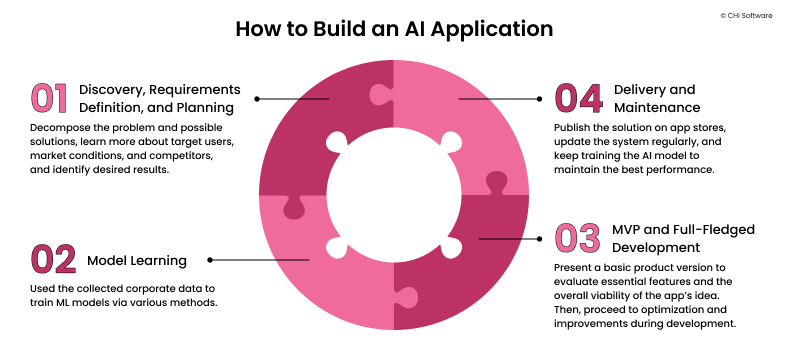

Building an AI system is a marathon that demands research, assessment, and experimentation to establish the role of AI in your business and to ensure a secure, ethical, and ROI-driven solution release. To help you out, the Xenoss team produced a basic framework describing how to build an AI system. It covers the key considerations, challenges, and stages of the AI project lifecycle.

Your first goal is to establish AI's role in your operations. The easiest way to approach this is to work backward from your goal(s): what do you want to achieve by adopting AI?

The Value of Personalized Interfaces in Contemporary Web Solutions

Look for use cases where the technology has already convincingly demonstrated its potential. In the finance industry, AI has proven its worth for fraud detection. Machine learning and deep learning models outperform conventional rules-based fraud detection systems: they produce fewer false positives and are better at identifying new types of fraud.
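To make "fewer false positives" concrete, here is a minimal sketch of the two numbers usually compared between fraud detectors: the false positive rate (legitimate transactions wrongly flagged) and the detection rate (fraud actually caught). It assumes binary labels (1 = fraud); the `amount_rule` detector and the toy transactions are hypothetical and made up for illustration, not measured results. An ML model can be scored with the same `evaluate` function by wrapping its prediction in a callable.

```python
from typing import Callable, List, Sequence, Tuple


def false_positive_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of legitimate transactions (label 0) that were wrongly flagged as fraud."""
    false_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    negatives = sum(1 for t in y_true if t == 0)
    return false_pos / negatives if negatives else 0.0


def detection_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of actual fraud cases (label 1) that were flagged (also called recall)."""
    true_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    positives = sum(1 for t in y_true if t == 1)
    return true_pos / positives if positives else 0.0


def evaluate(detector: Callable[[float], int],
             data: List[Tuple[float, int]]) -> Tuple[float, float]:
    """Apply any detector (a rule or a wrapped ML model) to (amount, label) pairs."""
    y_true = [label for _, label in data]
    y_pred = [detector(amount) for amount, _ in data]
    return false_positive_rate(y_true, y_pred), detection_rate(y_true, y_pred)


# Hypothetical rules-based detector: flag every transaction above a fixed amount.
def amount_rule(amount: float) -> int:
    return 1 if amount > 1000 else 0


# Made-up toy data: (transaction amount, label) where 1 = fraud, 0 = legitimate.
toy_data = [(50, 0), (1200, 0), (3000, 1), (80, 1), (1500, 0), (20, 0)]

fpr, dr = evaluate(amount_rule, toy_data)
print(f"false positive rate={fpr:.2f}, detection rate={dr:.2f}")
# prints: false positive rate=0.50, detection rate=0.50
```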

Researchers agree that synthetic datasets can improve privacy and representation in AI, especially in sensitive industries such as healthcare or finance. Gartner predicts that by 2024, as much as 60% of the data used for AI will be synthetic. All acquired training data will then have to be pre-cleaned and cataloged. Use a consistent taxonomy to establish clear data lineage, and then monitor how different users and systems use the provided data.
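A data catalog is one way to keep lineage and usage visible. The following is a minimal in-memory sketch, assuming a two-level taxonomy (domain, category); `DataCatalog` and `DatasetEntry` are hypothetical names, not a specific product's API. Each entry records its upstream datasets and who reads it, so you can trace where a (possibly synthetic) training set came from.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Tuple


@dataclass
class DatasetEntry:
    name: str
    domain: str      # first-level taxonomy term, e.g. "finance"
    category: str    # second-level taxonomy term, e.g. "transactions"
    synthetic: bool  # True if the data was generated rather than collected
    parents: List[str] = field(default_factory=list)  # upstream datasets (lineage)
    access_log: List[Tuple[str, datetime]] = field(default_factory=list)


class DataCatalog:
    """Hypothetical in-memory catalog that tracks lineage and data usage."""

    def __init__(self) -> None:
        self._entries: Dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        # Refuse entries whose upstream datasets are not cataloged yet,
        # so lineage never points at unknown data.
        for parent in entry.parents:
            if parent not in self._entries:
                raise KeyError(f"unknown upstream dataset: {parent}")
        self._entries[entry.name] = entry

    def record_access(self, name: str, consumer: str) -> None:
        # Log which user or system read the dataset, and when (UTC).
        self._entries[name].access_log.append((consumer, datetime.now(timezone.utc)))

    def lineage(self, name: str) -> List[str]:
        """All upstream datasets, nearest first (breadth-first walk)."""
        seen: List[str] = []
        queue = list(self._entries[name].parents)
        while queue:
            current = queue.pop(0)
            if current not in seen:
                seen.append(current)
                queue.extend(self._entries[current].parents)
        return seen

    def consumers(self, name: str) -> List[str]:
        """Distinct users/systems that have read the dataset, sorted by name."""
        return sorted({consumer for consumer, _ in self._entries[name].access_log})


catalog = DataCatalog()
catalog.register(DatasetEntry("raw_transactions", "finance", "transactions", synthetic=False))
catalog.register(DatasetEntry("clean_transactions", "finance", "transactions",
                              synthetic=False, parents=["raw_transactions"]))
catalog.register(DatasetEntry("synthetic_transactions", "finance", "transactions",
                              synthetic=True, parents=["clean_transactions"]))
catalog.record_access("synthetic_transactions", "fraud-model-training")

print(catalog.lineage("synthetic_transactions"))    # ['clean_transactions', 'raw_transactions']
print(catalog.consumers("synthetic_transactions"))  # ['fraud-model-training']
```

In production this role is usually played by a dedicated metadata or catalog service; the sketch only shows the bookkeeping such a service performs.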

When Load Time Improvement Is Critical for Today's SEO combined with Visitor Conversion

In addition, you'll need to split the available data into training, validation, and test datasets to benchmark the developed model. Mature AI development teams handle most data management processes with data pipelines: an automated sequence of steps for data ingestion, processing, storage, and subsequent access by AI models.

[Figure: Example of data pipeline architecture for data warehousing]

With a robust data pipeline architecture, companies can process vast numbers of data records in near real time.
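The pipeline stages and the train/validation/test split can be sketched in plain Python. This is a minimal illustration, assuming an in-memory source and store; `ingest`, `process`, `store`, and `split` are hypothetical stage names, and the 70/15/15 ratio is an assumed default rather than a recommendation from the source. Shuffling with a fixed seed keeps the split reproducible, so benchmarks stay comparable across model versions.

```python
import random
from typing import Dict, List, Tuple

Record = Dict[str, float]
STORE: Dict[str, List[Record]] = {}  # stand-in for a warehouse or feature store


def ingest(n: int = 1000, seed: int = 0) -> List[Record]:
    """Hypothetical source; a real pipeline would read files, queues, or APIs."""
    rng = random.Random(seed)
    return [{"amount": rng.uniform(1, 500), "label": float(rng.random() < 0.1)}
            for _ in range(n)]


def process(records: List[Record]) -> List[Record]:
    """Drop invalid rows (non-positive amounts) and add a derived feature."""
    cleaned = [dict(r) for r in records if r["amount"] > 0]
    for r in cleaned:
        r["is_large"] = float(r["amount"] > 250)
    return cleaned


def store(records: List[Record], key: str = "transactions_v1") -> List[Record]:
    """Persist the processed batch under a versioned key so models can access it later."""
    STORE[key] = records
    return records


def split(records: List[Record], train: float = 0.7, val: float = 0.15,
          seed: int = 42) -> Tuple[List[Record], List[Record], List[Record]]:
    """Shuffle reproducibly, then cut into train / validation / test sets."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


train_set, val_set, test_set = split(store(process(ingest())))
print(len(train_set), len(val_set), len(test_set))  # 700 150 150
```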

Amazon's Supply Chain Finance Analytics team, in turn, optimized its data engineering workloads with Dremio. With the new setup, the company could set up new extract, transform, load (ETL) jobs 90% faster, while query speed increased 10x. This, in turn, made data much more accessible for thousands of concurrent users and machine learning projects.

When Responsiveness Optimization Makes a Difference for Both Organic Visibility and Audience Retention

The training process is complex as well, and prone to issues such as sample efficiency, training stability, and catastrophic interference, among others. Successful commercial applications are still few and mostly come from deep tech companies.

Foundation models are the backbone of generative AI. By using a pre-trained, fine-tuned model, you can quickly train a new generative AI algorithm.

Unlike conventional ML frameworks for natural language processing, foundation models require smaller labeled datasets because they have already embedded knowledge during pre-training. Training a foundation model from scratch, however, requires enormous computational resources.
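One lightweight way to reuse a pre-trained model with very little labeled data is linear probing: keep the pre-trained encoder frozen and train only a small classifier head on its outputs. Full fine-tuning also updates some encoder weights, so treat this as a simplified stand-in. In the sketch, `PRETRAINED_EMBEDDINGS` is a hypothetical two-dimensional table standing in for a real foundation model's representations, and the four labeled examples are made up.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical stand-in for a frozen pre-trained encoder: a fixed embedding table.
# In a real system these vectors would come from a foundation model and stay frozen.
PRETRAINED_EMBEDDINGS: Dict[str, List[float]] = {
    "great": [0.9, 0.1], "excellent": [0.8, 0.2], "love": [0.85, 0.05],
    "terrible": [0.1, 0.9], "awful": [0.15, 0.85], "hate": [0.05, 0.95],
}


def encode(text: str) -> List[float]:
    """Average the frozen embeddings of known words; unknown words are ignored."""
    vectors = [PRETRAINED_EMBEDDINGS[w] for w in text.lower().split()
               if w in PRETRAINED_EMBEDDINGS]
    if not vectors:
        return [0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples: List[Tuple[str, int]], epochs: int = 500,
               lr: float = 1.0) -> Tuple[List[float], float]:
    """Train only a logistic-regression head with SGD; the encoder is never updated."""
    weights, bias = [0.0, 0.0], 0.0
    features = [(encode(text), label) for text, label in examples]
    for _ in range(epochs):
        for x, y in features:
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights: List[float], bias: float, text: str) -> int:
    score = sum(w * xi for w, xi in zip(weights, encode(text))) + bias
    return int(sigmoid(score) >= 0.5)


# Four made-up labeled examples (1 = positive, 0 = negative) suffice here,
# because the frozen features already separate the two classes.
labeled = [("great service", 1), ("love it", 1),
           ("terrible support", 0), ("awful experience", 0)]
w, b = train_head(labeled)
print(predict(w, b, "excellent"), predict(w, b, "hate"))  # 1 0
```

Neither "excellent" nor "hate" appears in the labeled set; the head classifies them correctly only because the frozen encoder already places them near the labeled examples, which is the effect the paragraph above describes.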

The Combination of Visual Experience with Development in Current Online Platforms

One problem arises when model training conditions differ from deployment conditions: the model doesn't produce the desired results in the target environment because of differences in parameters or configurations. Another occurs when the statistical properties of the input data change over time, affecting the model's performance. For example, if a model dynamically optimizes prices based on the total number of orders and conversion rates, but these parameters change significantly over time, it will no longer provide accurate recommendations.
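Changes in input statistics can be monitored by comparing the production distribution of a feature with its training distribution. The sketch below uses the Population Stability Index (PSI), one common drift metric chosen here for illustration; the source does not prescribe a specific method. The bin edges and the 0.1 / 0.25 thresholds are assumptions (a widely used rule of thumb), and the order counts are hypothetical.

```python
import math
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin; sorted edges define len(edges) + 1 bins,
    with the first and last bins open-ended."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(1 for e in edges if v >= e)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum((actual - expected) * ln(actual / expected)) over bins."""
    psi = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # guard empty bins against log(0) and division by 0
        psi += (a - e) * math.log(a / e)
    return psi


def drift_level(psi: float) -> str:
    # Assumed rule-of-thumb thresholds; tune them for your own data.
    if psi < 0.1:
        return "stable"
    return "moderate drift" if psi < 0.25 else "significant drift"


# Hypothetical daily order counts seen during training vs. in production.
train_orders = [10, 12, 14, 16, 18, 20, 22, 24]
prod_orders = [30, 32, 34, 36, 38, 40, 42, 44]
edges = [15, 20, 25]

print(drift_level(population_stability_index(train_orders, train_orders, edges)))  # stable
print(drift_level(population_stability_index(train_orders, prod_orders, edges)))   # significant drift
```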

Rather, most teams maintain a database of model versions and run iterative model training to gradually improve the quality of the end product. On average, AI developers shelve about 80% of the models they create, and only 11% are successfully deployed to production. […] is one of the essential techniques for training better AI models.
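A database of model versions can be as simple as a table of parameters and validation scores. The following in-memory `ModelRegistry` is a hypothetical sketch, assuming validation accuracy is the single benchmark metric; the parameters and accuracy values in the usage example are placeholders. Every version except the best one is marked as shelved, mirroring the practice of discarding most trained models.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    params: Dict[str, float]
    val_accuracy: float
    status: str = "candidate"  # "candidate", "shelved", or "production"


class ModelRegistry:
    """Hypothetical in-memory registry; a real one would persist to a database."""

    def __init__(self) -> None:
        self.versions: List[ModelVersion] = []

    def log(self, params: Dict[str, float], val_accuracy: float) -> ModelVersion:
        # Version numbers increase with every training iteration.
        mv = ModelVersion(len(self.versions) + 1, dict(params), val_accuracy)
        self.versions.append(mv)
        return mv

    def best(self) -> Optional[ModelVersion]:
        # Benchmark: highest validation accuracy wins (None if nothing is logged).
        return max(self.versions, key=lambda mv: mv.val_accuracy, default=None)

    def promote_best(self) -> Optional[ModelVersion]:
        winner = self.best()
        for mv in self.versions:
            mv.status = "production" if mv is winner else "shelved"
        return winner


registry = ModelRegistry()
# Placeholder scores for three hypothetical training iterations.
registry.log({"learning_rate": 0.1}, val_accuracy=0.81)
registry.log({"learning_rate": 0.01}, val_accuracy=0.86)
registry.log({"learning_rate": 0.001}, val_accuracy=0.84)

winner = registry.promote_best()
print(winner.version, [mv.status for mv in registry.versions])
# 2 ['shelved', 'production', 'shelved']
```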

You benchmark the iterations to identify the model version with the highest accuracy. Feature selection is another important practice. A model with too few features struggles to adapt to variations in the data, while too many features can lead to overfitting and worse generalization. Highly correlated features can also cause overfitting and degrade explainability methods.
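One simple way to handle highly correlated features is a correlation filter: keep a feature only if its absolute Pearson correlation with every feature already kept stays below a threshold. This is a minimal sketch, assuming numeric features; the 0.9 threshold and the feature names are hypothetical. It addresses redundancy only; deciding how many features a model needs still requires validation.

```python
import math
from typing import Dict, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 for constant inputs."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def drop_correlated(features: Dict[str, List[float]], threshold: float = 0.9) -> List[str]:
    """Greedy filter: walk features in insertion order and keep one only if its
    absolute correlation with every already-kept feature is below the threshold."""
    kept: List[str] = []
    for name, values in features.items():
        if all(abs(pearson(values, features[k])) < threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical feature columns; "order_count_x2" duplicates information in "order_count".
features = {
    "order_count": [1, 2, 3, 4, 5],
    "order_count_x2": [2, 4, 6, 8, 10],
    "conversion_rate": [0.3, 0.1, 0.4, 0.2, 0.5],
}
print(drop_correlated(features))  # ['order_count', 'conversion_rate']
```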

When Specialized Development Teams Manages Advanced Development Projects

Yet it's also the most error-prone one. Only 32% of ML projects, including refreshing models for existing deployments, typically reach deployment.

[Figure: Deployment success across various machine learning projects]

The reasons for failed deployments range from a lack of executive support for the project due to unclear ROI to technical difficulties with ensuring stable model operations under increased load.

The team needed to ensure that the ML model was highly available and served highly personalized recommendations from the titles available on the user's device, and to do so for the platform's millions of users. To ensure high performance, the team decided to schedule model scoring offline and then serve the results once the user logs into their device.
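The offline-scoring approach can be sketched as a batch job that ranks each user's available titles ahead of time, plus a cache that returns the stored list at login. The `score` function, affinity values, and fallback list are hypothetical; a real system would load a trained model and persist results in a key-value store rather than a Python dict.

```python
from typing import Callable, Dict, List

ScoreFn = Callable[[str, str], float]


def batch_score(users: List[str], titles_by_user: Dict[str, List[str]],
                score: ScoreFn, top_k: int = 3) -> Dict[str, List[str]]:
    """Offline job: rank each user's available titles ahead of time."""
    results: Dict[str, List[str]] = {}
    for user in users:
        titles = titles_by_user.get(user, [])
        results[user] = sorted(titles, key=lambda t: score(user, t), reverse=True)[:top_k]
    return results


class RecommendationCache:
    """Serving side: login only does a lookup, so no model runs on the request path."""

    def __init__(self, precomputed: Dict[str, List[str]], fallback: List[str]) -> None:
        self._precomputed = precomputed
        self._fallback = fallback

    def on_login(self, user: str) -> List[str]:
        return self._precomputed.get(user, self._fallback)


# Hypothetical affinity scores standing in for a trained recommendation model.
affinity = {("u1", "A"): 0.2, ("u1", "B"): 0.9, ("u1", "C"): 0.5, ("u1", "D"): 0.7,
            ("u2", "B"): 0.1, ("u2", "C"): 0.8}
titles_on_device = {"u1": ["A", "B", "C", "D"], "u2": ["B", "C"]}

precomputed = batch_score(["u1", "u2"], titles_on_device,
                          score=lambda u, t: affinity.get((u, t), 0.0))
cache = RecommendationCache(precomputed, fallback=["popular-title"])
print(cache.on_login("u1"))  # ['B', 'D', 'C']
print(cache.on_login("u3"))  # ['popular-title']
```

The trade-off is freshness: recommendations only change when the batch job reruns, in exchange for login latency that does not depend on model inference.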

Why Prospect Capture Strategies Vary for different Business Types with Web Solutions

Ultimately, successful AI model deployments come down to having efficient processes. Just as the DevOps principles of continuous integration (CI) and continuous delivery (CD) improve the deployment of regular software, MLOps improves the speed, efficiency, and predictability of AI model deployments.
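In an MLOps setup, a CI/CD pipeline typically includes an automated gate that decides whether a newly trained model may replace the production one. The check below is a hypothetical sketch, assuming accuracy and p95 latency are the deciding metrics; the thresholds and field names are illustrative, not taken from any specific MLOps tool.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class EvalReport:
    accuracy: float         # offline validation accuracy
    p95_latency_ms: float   # 95th-percentile inference latency
    tests_passed: bool      # result of the usual CI unit/integration tests


def promotion_gate(candidate: EvalReport, production: EvalReport,
                   min_gain: float = 0.0,
                   max_latency_ms: float = 100.0) -> Tuple[bool, List[str]]:
    """Return (approved, reasons) for replacing the production model with the candidate."""
    reasons: List[str] = []
    if not candidate.tests_passed:
        reasons.append("tests failed")
    if candidate.accuracy <= production.accuracy + min_gain:
        reasons.append("accuracy does not beat production")
    if candidate.p95_latency_ms > max_latency_ms:
        reasons.append("latency budget exceeded")
    return not reasons, reasons


prod = EvalReport(accuracy=0.85, p95_latency_ms=50.0, tests_passed=True)
print(promotion_gate(EvalReport(0.87, 45.0, True), prod))   # (True, [])
print(promotion_gate(EvalReport(0.88, 180.0, True), prod))  # (False, ['latency budget exceeded'])
```

In a real pipeline, a failing gate would stop the deployment stage and surface the reasons in the CI log.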

Latest Posts

Implementing Body shop in the Wellness Industry

API-First Development and How It Matters for Future-Proofing

Why Businesses Select Specialized Development Teams for Mission-Critical Digital Transformation
